After 18 months of work, we are now closing the research project « Move Your Story » with our partner TAM of the University of Geneva.

The project was funded at the end of 2012 by the CTI, with the goal of developing an algorithm based on smartphone sensors to drastically improve the results of our mobile application Memowalk.

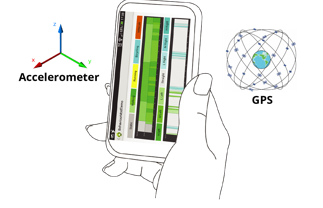

Abstract—Interacting with smartphones generally requires direct input from the user. We investigated a novel way based on the user’s behaviour to interact directly with a phone. (…) We present MoveYourStory, a mobile application that generates a movie composed of small video clips selected according to the user’s position and his current behaviour. Towards this end, we have implemented an activity recognition module that is able to recognise current activities, like walking, bicycling or travelling in a vehicle using the accelerometer and the GPS embedded in a smartphone. Moreover, we added different walking intensity levels to the recognition algorithm, as well as the possibility of using the application in any position. A user study was done to validate our algorithm. Overall, we achieved 96.7% recognition accuracy for walking activities and 87.5% for the bicycling activity.

Jody Hausmann, Kevin Salvi, Jérôme Van Zaen, Adrian Hindle and Michel Deriaz, User Behaviour Recognition for Interacting with an Artistic Mobile Application, in Proceedings of the 4th International Conference on Multimedia Computing and Systems (ICMCS), Marrakesh, Morocco, April 2014

The activity and behaviour detection will « help » the editing engine by providing a behavioural pattern that will be translated into an editing pattern. More on this part in an upcoming post.

| Activities | Orientation | Behaviours |
| --- | --- | --- |
| Static | Low left | Regular |
| Walking slowly | Medium left | Visiting – calm |
| Walking | High left | Chaotic |
| Walking fast | Low right | Sportive |
| Running | Medium right | |
| Vehicle | High right | |
| Bicycle | | |
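To make the activity column more concrete, here is a minimal Python sketch of how such labels could be derived from a window of accelerometer samples and a GPS speed. It is not the project's algorithm: the function names, the rule order and every threshold are hypothetical values chosen for illustration. It uses the magnitude of the acceleration vector so that the result does not depend on how the phone is held, which is one simple way (assumed here) to support use in any position.

```python
from math import sqrt
from statistics import pvariance

# All thresholds are illustrative assumptions, not values from the project.
STATIC_VARIANCE = 0.05   # (m/s^2)^2: below this, the device is treated as still
BICYCLE_SPEED = 3.5      # m/s: faster than a typical walk
VEHICLE_SPEED = 8.0      # m/s: above this, we assume a motorised vehicle
WALK_LEVELS = [          # (upper bound on acceleration variance, label)
    (1.0, "Walking slowly"),
    (4.0, "Walking"),
    (9.0, "Walking fast"),
]


def magnitude(sample):
    """Norm of one (x, y, z) accelerometer sample, independent of orientation."""
    x, y, z = sample
    return sqrt(x * x + y * y + z * z)


def classify_activity(accel_samples, gps_speed):
    """Return an activity label for one window of samples and a GPS speed in m/s."""
    variance = pvariance([magnitude(s) for s in accel_samples])
    if gps_speed > VEHICLE_SPEED:
        return "Vehicle"
    if variance < STATIC_VARIANCE:
        return "Static"
    # Moving faster than a walk but with little shaking: assume a bicycle.
    if gps_speed > BICYCLE_SPEED and variance < WALK_LEVELS[0][0]:
        return "Bicycle"
    for upper_bound, label in WALK_LEVELS:
        if variance < upper_bound:
            return label
    return "Running"


# Hypothetical window: the phone shakes by +/- 1.5 m/s^2 around gravity.
window = [(0.0, 0.0, 9.81 + 1.5), (0.0, 0.0, 9.81 - 1.5)] * 25
print(classify_activity(window, gps_speed=1.4))
```

A real recogniser would learn these boundaries from recorded data rather than hard-code them; the sketch only shows how the two sensors can complement each other.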

Gesture recognition is a promising approach to detect user interest as it does not require visual attention from the user compared to traditional techniques. This is especially true for mobile devices which usually are not the focus of user attention and whose screens can be quite small. Furthermore, it is often faster and more efficient to perform a gesture than to navigate through interface menus. Experimental tests have shown that our system achieves high recognition accuracy in both subject-dependent and subject-independent scenarios when detecting pointing gestures.

Jérôme Van Zaen, Jody Hausmann, Kevin Salvi and Michel Deriaz, Gesture Recognition for Interest Detection in Mobile Applications, in Proceedings of the 4th International Conference on Multimedia Computing and Systems (ICMCS), Marrakesh, Morocco, April 2014.

The following gestures can be detected automatically when the user performs a movement with the device. The gesture recognition module was calibrated and adapted to distinguish these gestures from other movements such as walking. Moreover, it can recognize whether the gesture was made with the left or the right hand.

The module analyses data from the accelerometer and the gyroscope. The gesture is then recognized by a machine learning algorithm trained on a total of 3600 gesture recordings, performed by six subjects over 5 days.
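As an illustration of this kind of pipeline, the following Python sketch extracts simple statistics from a window of accelerometer and gyroscope samples and assigns the window to the closest trained gesture. The post does not say which learning algorithm or features the module uses; the nearest-centroid classifier, the mean and standard deviation features, and all function names here are assumptions chosen for brevity.

```python
from math import dist
from statistics import mean, pstdev


def extract_features(window):
    """Mean and standard deviation of each sensor axis.

    window: list of (ax, ay, az, gx, gy, gz) tuples, i.e. three
    accelerometer axes followed by three gyroscope axes.
    """
    axes = list(zip(*window))
    return [mean(axis) for axis in axes] + [pstdev(axis) for axis in axes]


def train(labelled_windows):
    """Build one centroid per gesture from (label, window) pairs."""
    features_by_label = {}
    for label, window in labelled_windows:
        features_by_label.setdefault(label, []).append(extract_features(window))
    return {
        label: [mean(column) for column in zip(*feature_rows)]
        for label, feature_rows in features_by_label.items()
    }


def recognise(centroids, window):
    """Return the label whose centroid is closest to the window's features."""
    features = extract_features(window)
    return min(centroids, key=lambda label: dist(centroids[label], features))
```

In this sketch, each of the recorded gestures would be passed to `train` with its label (for example "Pointing forward"), and `recognise` would then be called on each new window of sensor data. Distinguishing the left hand from the right could be handled by training separate labels per hand, although that is only one possible design.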

| Device in the left hand | Device in the right hand |
| --- | --- |
| Answering call | Answering call |
| Pointing forward | Pointing forward |
| Pointing on the left | Pointing on the left |
| Pointing on the right | Pointing on the right |
| Looking at the screen* | Looking at the screen* |
| Checking the time** | Checking the time** |

* Movement starting with the device in a pocket and ending at around the user’s eye level

** Like the movement “Looking at the screen”, but ending at about half that height